Seven papers from the Division of Information Science at Tsinghua SIGS were accepted to the International Joint Conference on Artificial Intelligence (IJCAI 2020), an annual gathering of international AI researchers and practitioners. The conference is influential in the field of artificial intelligence, and this year only 592 of 4,714 submissions (about 12.6%) were accepted.

"Collaborative Learning of Depth Estimation, Visual Odometry and Camera Relocalization from Monocular Videos"

Author: Zhao Haimei, Master’s student; Advisor: Yuan Bo

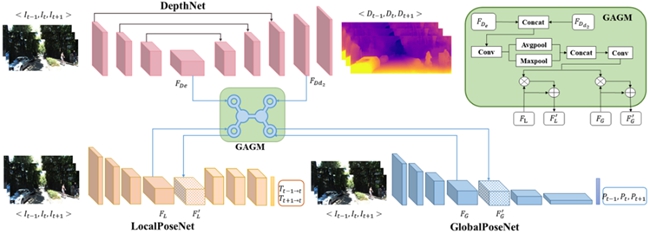

In recent years, the rapid development of unmanned systems such as autonomous vehicles and drones has led to extensive research on environment perception using monocular vision. Zhao Haimei proposes a joint learning framework with three network branches (DepthNet, LocalPoseNet and GlobalPoseNet) that simultaneously improves the accuracy and performance of depth estimation, visual odometry and camera relocalization.

Network structure diagram of the joint learning framework

"A Dual Input-aware Factorization Machine for CTR Prediction"

Author: Lu Wantong, Master’s student; Advisor: Yuan Bo

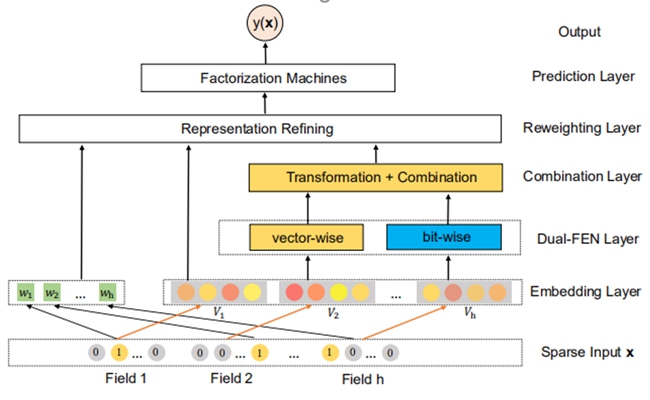

To address current limitations of Factorization Machines (FM) in click-through rate (CTR) prediction, Lu Wantong proposes a novel model, the Dual Input-aware Factorization Machine (DIFM), which learns input-aware factors at both the bit-wise and vector-wise levels and uses them to adaptively reweight the original feature representations. Comprehensive experiments on two CTR prediction data sets show that DIFM can outperform several recent models.

Overall structure of the DIFM model
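
The reweighting idea can be pictured with a minimal sketch. It assumes a standard second-order FM and a hypothetical vector `m` of per-feature input-aware factors; in DIFM these factors are learned by the model, whereas here they are simply passed in, so this illustrates reweighting in general rather than the paper's implementation. The function names are illustrative only.

```python
def fm_score(w0, w, V, x):
    """Second-order FM: w0 + sum_i w_i*x_i + sum_{i<j} <v_i, v_j>*x_i*x_j."""
    linear = sum(wi * xi for wi, xi in zip(w, x))
    pairwise = 0.0
    for f in range(len(V[0])):  # loop over embedding dimensions
        s = sum(V[i][f] * x[i] for i in range(len(x)))
        s2 = sum((V[i][f] * x[i]) ** 2 for i in range(len(x)))
        pairwise += 0.5 * (s * s - s2)  # standard FM identity for pairwise terms
    return w0 + linear + pairwise


def reweighted_fm_score(w0, w, V, x, m):
    """FM score after scaling each feature's weight and embedding by m_i.

    m is a hypothetical per-feature factor (assumption: supplied by the
    caller here; a real model would compute it from the input instance).
    """
    w_m = [wi * mi for wi, mi in zip(w, m)]
    V_m = [[v * mi for v in vi] for vi, mi in zip(V, m)]
    return fm_score(w0, w_m, V_m, x)
```

With all factors equal to 1 the reweighted score reduces to the plain FM score, which makes the sketch easy to sanity-check.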

"Clarinet: A One-step Approach Towards Budget-friendly Unsupervised Domain Adaptation"

Author: Zhang Yiyang, Master’s student; Advisor: Yuan Bo

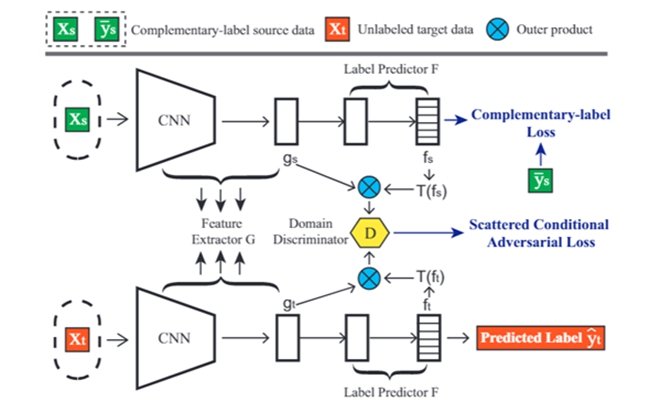

In her paper, Zhang Yiyang considers a new low-cost approach to unsupervised domain adaptation (UDA). The CLARINET model offers a simpler and cheaper approach by annotating source-domain samples with complementary labels (labels that name a class a sample does not belong to), which can further promote the application of transfer learning. Experiments show that CLARINET can effectively use a source domain with complementary labels to achieve knowledge transfer.

Core framework of CLARINET

"Feature Augmented Memory with Global Attention Network for VideoQA"

Author: Cai Jiayin, Master’s student; Advisor: Yuan Chun

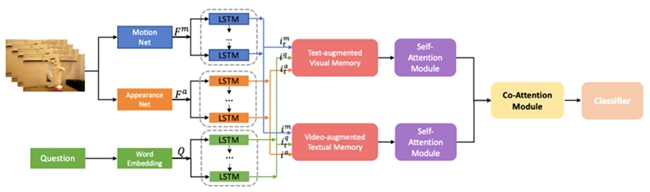

In the field of video understanding, methods based on recurrent neural networks (RNNs) and self-attention mechanisms have achieved good results. To improve the memory and temporal modeling of long short-term memory (LSTM) networks, Cai Jiayin proposes a cross-modal feature-augmented memory module. The module writes important information into a long-term memory component, and uses self-attention and cross-modal attention to interact with the global high-level semantic information of vision and text.

Overall framework of the model

"HAF-SVG: Hierarchical Stochastic Video Generation with Aligned Features"

Author: Lin Zhihui, PhD student; Advisor: Yuan Chun

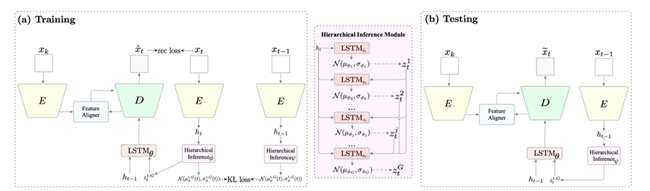

Lin Zhihui proposes a hierarchical stochastic video generation method with aligned features (HAF-SVG) to solve problems such as object distortion and collapse in stochastic video generation (SVG). Validated on multiple synthetic and real data sets, HAF-SVG is significantly better than previous methods both qualitatively and quantitatively. Visual examples show that the hierarchical model can extract disentangled video representations, which allows a better understanding of video information and analysis of the key factors in generation.

HAF-SVG flowchart

"Triple-to-Text Generation with an Anchor-to-Prototype Framework"

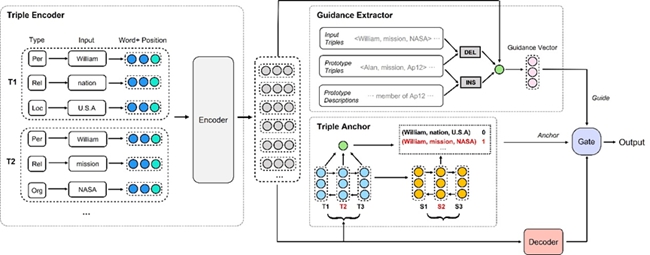

Authors: Li Ziran and Lin Zibo, Master’s students; Advisor: Zheng Haitao

In natural language generation, generating text from a set of entity-relation triples is a challenging task. In this paper, Li Ziran and Lin Zibo propose a new Anchor-to-Prototype framework, which retrieves a similar set of triples and its corresponding description text from the training data, and extracts a writing template from that text to guide generation. The framework further groups and aligns the input triples by sentence so that the template is matched and used more accurately.

Anchor-to-prototype framework
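
The retrieval step can be pictured with a short sketch. It assumes that similarity between triple sets is measured by the Jaccard overlap of their relation names; the article does not say how similarity is computed, so this measure, the function names and the toy data are all hypothetical and stand in for whatever the paper actually uses.

```python
def relation_set(triples):
    """Collect the relation names from (subject, relation, object) triples."""
    return {rel for _, rel, _ in triples}


def jaccard(a, b):
    """Jaccard overlap of two sets; defined as 0.0 when both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve_prototype(anchor, training_pairs):
    """Return the (triples, text) training pair most similar to the anchor.

    Assumption: similarity is relation-set overlap, chosen only for
    illustration.
    """
    anchor_rels = relation_set(anchor)
    return max(
        training_pairs,
        key=lambda pair: jaccard(anchor_rels, relation_set(pair[0])),
    )


# Toy, made-up data: each training pair is (triples, description text).
training_pairs = [
    ([("A", "occupation", "B"), ("A", "birthPlace", "C")],
     "A, born in C, worked as a B."),
    ([("X", "capital", "Y")], "The capital of X is Y."),
]
anchor = [("P", "birthPlace", "Q"), ("P", "occupation", "R")]
prototype_triples, prototype_text = retrieve_prototype(anchor, training_pairs)
```

In this toy case the first training pair is selected because it shares both relations with the anchor, and its text would then serve as the source of a writing template.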

"Infobox-to-text Generation with Tree-like Planning based Attention Network"

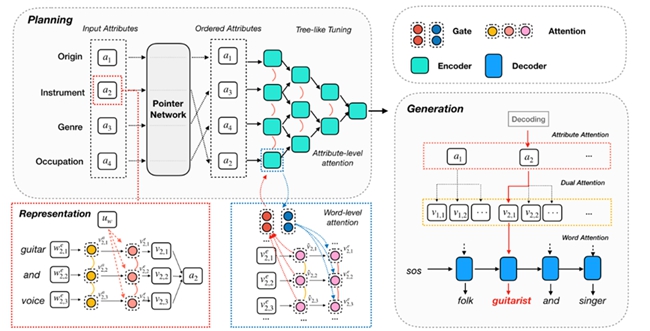

Author: Bai Yang, Master’s student; Advisor: Zheng Haitao

Bai Yang’s paper proposes an attention network based on tree-like planning to improve the automatic generation of text descriptions from structured data. The tree-like structure guides the encoder to refine and integrate the input information layer by layer, yielding better planning for text generation. Experimental results show that this method can effectively improve both the quality of the generated text and its robustness to out-of-order input.

Attention network based on tree-like planning

Cover design by Wu Yutao, supervised by Wen Xueyuan

Edited by Karen Lee